Mixing With Headphones 2

In the second instalment of this six-part series, Greg Simmons explores the differences between mixing with speakers and mixing with headphones.

In the first instalment of this six-part series we explored the ascent of headphone listening, culminating in the current situation where headphone listening has supplanted speaker listening for the vast majority of music purchasing decisions and active music consumption. As audio professionals we would be foolish to underrate the significance of headphones in our mixing and monitoring decisions, but how do we reduce our reliance on an institutionalised technology – speakers – that has ultimately become irrelevant to the majority of the music consuming market? We can’t simply announce that we’re abandoning speakers for headphones, because there are significant differences between mixing through speakers and mixing through headphones.

Speaker reproduction brings a lot of changes to our mix; what we hear from the speakers is not what is coming out of the mixing console or DAW. The sound we hear at our monitoring position has had the frequency response and distortion of our speakers embedded into it, the acoustics of our listening room superimposed upon it, and possibly has comb-filtering introduced to it due to reflections off our work surfaces.

If the tonality of your mixes does not translate acceptably to other speakers outside of your mixing room, it means your mixing decisions are being influenced by frequency response issues coming from your monitors and/or your mixing room’s acoustics. If your mixes have significantly different levels of reverberation and/or strange panning issues when heard on other speakers outside of your mixing room, it means your mixing decisions are being influenced by your mixing room’s reverberation and first-order reflections. If the tonality of some sounds within your mix (particularly the snare) changes significantly when you lean forward or backward from your normal mixing position, it means you’ve got comb filtering caused by reflections off your work surface, and you’re wasting your time trying to find the right mixing position because it probably doesn’t exist…

Most contemporary monitor speakers provide acceptable performance within their intended bandwidth, which means the problems described above are not caused by your monitors, and therefore buying new monitors is not the solution (unless you’re living in that acoustic fantasy world where gut-shaking dance-floor subsonics come from shoebox-sized desktop speakers). The smart solution is to seek the advice of an acoustician. Alternatively, you could stop relying on big monitor speakers and the acoustic treatments they require, and switch to mixing on headphones – which is, coincidentally, what this series is all about. So read on…

VARIABLE COMPENSATIONS

Speaker listening introduces a lot of variables that don’t exist with headphone listening. Compensating for those variables with the tiny ongoing tweaks and refinements that take place during the course of a mix – in response to cross-referencing with other speakers, changing seating posture, feedback from others inside the room but outside of the sweet spot, returning to the mix after a break, and so on – tends to make our mixes more resilient and thereby improves their translation across numerous playback systems.

A mix made only on speakers will usually need very little tweaking to sound ‘right’ when heard through headphones, even though it might not take advantage of all that headphones have to offer. A mix made only on headphones can take advantage of all that headphones have to offer, but will often need considerable tweaking to sound ‘right’ when heard through speakers.

How can we make ‘market relevant’ mixes that exploit the strengths of headphones without losing the ‘tweaking-for-the-variables’ benefits that speaker mixing introduces? We can start by understanding a) how human hearing works, b) what we hear and feel when listening to speakers, and c) what we don’t hear and feel when listening to headphones…

HOW DOES HUMAN HEARING WORK?

Human beings have two ears, one on either side of the head, to capture two slightly different versions of the same sound. The ear/brain system uses the differences between these two versions of the same sound to determine where that sound is coming from in a process called ‘localisation’.

The illustration below shows a listener receiving sound information from a sound source located to the left of centre. There are three ‘difference’ mechanisms the ear/brain system uses to localise the sound source, and, for this example, they all occur because the right ear is further from the sound source than the left ear.


Firstly, the sound will arrive at the right ear a short time after it arrives at the left ear, creating an Interaural Time Difference (ITD) – which is sometimes referred to as an Interaural Phase Difference (IPD), particularly at lower frequencies where the wavelength is longer than the width of the average human head and therefore the time difference occurs within one cycle.
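The size of the ITD can be estimated with Woodworth’s spherical-head approximation, a standard textbook model that is not part of the original text. The minimal Python sketch below assumes a head radius of 8.75cm and a speed of sound of 343m/s; both are typical values rather than measurements, and real heads vary. It also estimates the frequency at which the wavelength matches the width of the head, the boundary mentioned in the paragraph above.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumption)
HEAD_RADIUS = 0.0875    # m, a commonly used average head radius (assumption)

def woodworth_itd(azimuth_deg: float, radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Interaural Time Difference (seconds) for a distant source.

    Woodworth's spherical-head approximation: ITD = (r / c) * (theta + sin(theta)),
    for azimuths from 0 degrees (straight ahead) to 90 degrees (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))

def wavelength_equals_head_width(radius: float = HEAD_RADIUS,
                                 c: float = SPEED_OF_SOUND) -> float:
    """Frequency (Hz) whose wavelength equals the head width (2 * radius)."""
    return c / (2 * radius)

for az in (0, 30, 60, 90):
    print(f"{az:>2} degrees: ITD = {woodworth_itd(az) * 1e6:.0f} microseconds")
print(f"Wavelength equals head width at about {wavelength_equals_head_width():.0f} Hz")
```

With these assumptions the largest ITD (source directly to one side) comes out at well under a millisecond, which shows how small the timing cues are that the ear/brain system works with.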

Secondly, the signal arriving at the right ear has travelled further than the signal arriving at the left ear and will therefore have a lower SPL due to the Inverse Square Law. This creates an Interaural Amplitude Difference (IAD) – which is sometimes referred to as an Interaural Level Difference (ILD).
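The Inverse Square Law part of the IAD can be worked out from the path lengths to each ear. The sketch below makes a simplifying assumption that is not in the original text: the ears are treated as two points 17.5cm apart with nothing between them, so only the distance effect is calculated. For a source a couple of metres away this difference comes out well under 1dB; the larger level differences listeners experience, particularly at higher frequencies, come mostly from the acoustic shadowing of the head described in the next paragraph.

```python
import math

def inverse_square_level_difference(distance_near: float, distance_far: float) -> float:
    """Level drop in dB between two distances from a point source: 20 * log10(far / near)."""
    return 20 * math.log10(distance_far / distance_near)

def ear_distances(source_distance: float, azimuth_deg: float,
                  half_head_width: float = 0.0875) -> tuple[float, float]:
    """Straight-line distances (m) from a source to the left and right ears.

    Simplified geometry (assumption): listener at the origin facing +y, x positive
    to the right, ears at x = -half_head_width and x = +half_head_width, no head
    shadowing. Positive azimuth means the source is to the left of centre.
    """
    theta = math.radians(azimuth_deg)
    sx = -source_distance * math.sin(theta)
    sy = source_distance * math.cos(theta)
    left = math.hypot(sx + half_head_width, sy)
    right = math.hypot(sx - half_head_width, sy)
    return left, right

left, right = ear_distances(2.0, 30.0)  # source 2m away, 30 degrees left of centre
print(f"left {left:.3f} m, right {right:.3f} m, "
      f"difference {inverse_square_level_difference(left, right):.2f} dB")
```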

Thirdly, because the signal at the right ear travels across the listener’s face and enters the right pinna from a different angle than it enters the left pinna, the signal arriving at the right ear will have a different frequency spectrum than the signal arriving at the left ear due to ‘acoustic shadowing’ of the head, hair absorption, skin reflections, diffraction across the face, and the numerous comb filters and cavity resonances introduced by the pinna. All of these result in an Interaural Spectral Difference (ISD).

Collectively, the ITDs, IADs and ISDs are referred to as ‘HRTFs’ (Head Related Transfer Functions), because they represent the changes imposed on the signal as it passes around the listener’s head and into their ears. The ear/brain system uses the differences between the left and right HRTFs to determine where a sound is coming from, i.e. to localise it.

Loudness vs Frequency

An important quirk of human hearing is that its sensitivity to individual frequencies changes with the SPL. At lower SPLs we are less sensitive to low and high frequencies than we are to midrange frequencies, while at higher SPLs our sensitivity to the low and high frequencies increases significantly.

This behaviour is shown in the graph below, which contains a number of ‘Equal Loudness Contours’. Each contour uses a 1kHz tone at a stated SPL as a reference, and shows how much SPL is required for other frequencies to be perceived as being ‘equally as loud’ as the 1kHz reference.

Each contour is labelled with a Phon value, which represents the SPL of the 1kHz reference tone. For example, the 80 Phon contour shows the SPLs required for different frequencies to be perceived as being ‘equally as loud’ as a 1kHz tone that is being reproduced at 80dB SPL. As shown in the graph below, 125Hz will need an SPL of approximately 89dB to be perceived as being ‘equally as loud’ as 1kHz at 80dB SPL. Similarly, 8kHz would need an SPL of approximately 92dB to be perceived as being ‘equally as loud’ as 1kHz at 80dB SPL.

To put it another way, let’s say we had three separate sine wave oscillators: one generating a 1kHz tone, one generating a 125Hz tone and one generating an 8kHz tone. If the 1kHz oscillator’s output was adjusted to provide an SPL of 80dB, the 125Hz oscillator’s output would need to be 9dB higher than the 1kHz oscillator to sound ‘equally as loud’, and the 8kHz oscillator’s output would need to be 12dB higher than the 1kHz oscillator to sound ‘equally as loud’. So a 125Hz tone at 89dB SPL, a 1kHz tone at 80dB SPL and an 8kHz tone at 92dB SPL will all have ‘equal loudness’ – but those differences only apply when we’re on the 80 Phon contour (i.e. 1kHz at 80dB SPL). If we change the SPL of the 1kHz tone, the differences required for other frequencies to sound ‘equally as loud’ will also change, as seen by the differing shapes of the Equal Loudness Contours. If they were all the same shape we wouldn’t have to think about how our mix will translate to different playback levels…
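The arithmetic in the oscillator example can be written as a small lookup. The three SPL values are the approximate readings from the 80 Phon contour given in the text; a full contour would need published data (e.g. ISO 226), which is not reproduced here.

```python
# Approximate SPLs (dB) on the 80 Phon contour, as read from the graph in the text.
CONTOUR_80_PHON = {125: 89.0, 1000: 80.0, 8000: 92.0}

def offsets_from_reference(contour: dict[int, float],
                           reference_hz: int = 1000) -> dict[int, float]:
    """dB each frequency must sit above (or below) the 1kHz reference
    to be perceived as equally loud on this contour."""
    reference_spl = contour[reference_hz]
    return {hz: spl - reference_spl for hz, spl in contour.items()}

print(offsets_from_reference(CONTOUR_80_PHON))
```

Running it with a different contour’s values gives different offsets, which is the point made above: the required offsets depend on which contour we are on.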

The Equal Loudness Contours show us that as the SPL gets higher, the ear becomes more sensitive to low and high frequencies. This means that a mix made at a high monitoring level will sound lacking in low and high frequency energy when heard at a low monitoring level, and a mix made at a low monitoring level will have excessive low and high frequency energy when heard at a higher monitoring level. In other words, the frequency spectrum and tonal balance of our mixes are affected by the monitoring level used when mixing.

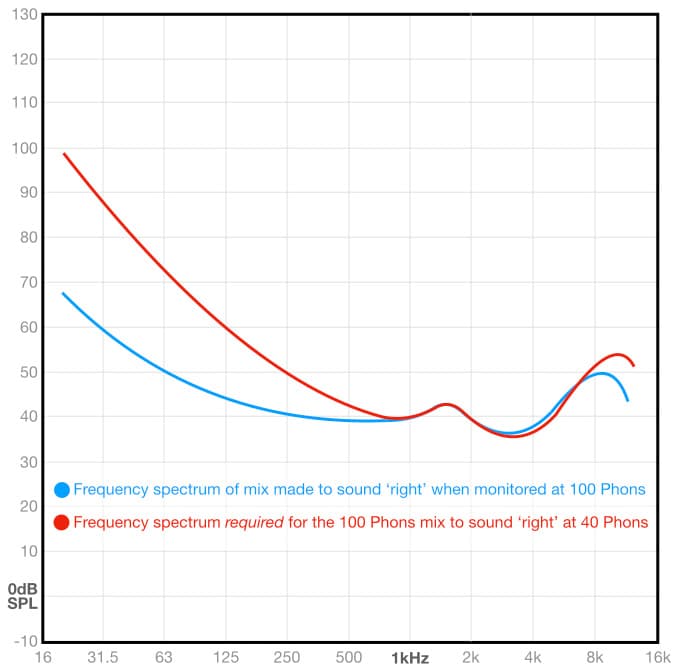

The illustration below shows what happens if we mix at a high monitoring level but play back at a low monitoring level. The blue contour represents the balance of frequencies in a mix made at a high monitoring level of 100 Phons, and the red contour represents how much energy is needed for that mix to have the same perceived frequency balance (i.e. sound the same) when heard at a low monitoring level of 40 Phons. Any frequencies on the blue contour that are below the red contour will be quieter than intended in the mix, and any frequencies on the blue contour that are above the red contour will be louder than intended in the mix.

From the graph above we can see that a mix made at a high monitoring level will be seriously lacking in low and high frequency energy if heard at a low monitoring level. As a matter of interest, this is exactly why some hi-fi systems have a ‘loudness’ button: it boosts the low and high frequencies in a way that looks very similar to the differences between the blue and red contours shown above, allowing the music to be heard at a very low level (to avoid waking up the family, for example) while still having a sufficient perceived balance of low and high frequencies.

The same problem occurs the other way around, as shown below. The blue contour represents the balance of frequencies in a mix made at a low monitoring level of 40 Phons, and the red contour represents how much energy is needed for that mix to have the same perceived frequency balance (i.e. sound the same) when replayed at a high monitoring level of 100 Phons. Any frequencies on the blue contour that are above the red contour will be louder than intended in the mix, and any frequencies on the blue contour that are below the red contour will be quieter than intended in the mix. We can see that a mix made at a low monitoring level will have excessive low and high frequency energy if replayed at a high monitoring level.
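The blue-versus-red comparison in both illustrations can be sketched as a per-frequency calculation. The assumption here is that a mix balanced at one Phon level has every frequency sitting on that contour, and that changing the playback volume shifts all frequencies by the same number of dB. The contour values themselves would have to come from published data such as ISO 226; none are included in the code.

```python
def perceived_deviation(mix_contour: dict[int, float], mix_phon: float,
                        play_contour: dict[int, float],
                        play_phon: float) -> dict[int, float]:
    """Per-frequency error (dB) when a mix balanced at mix_phon is replayed at play_phon.

    Both contours map frequency (Hz) to SPL (dB). The volume change shifts every
    frequency by (play_phon - mix_phon); the shifted mix is then compared with
    the play_phon contour. Negative values will sound quieter than intended,
    positive values louder than intended.
    """
    shift = play_phon - mix_phon
    return {hz: (mix_contour[hz] + shift) - play_contour[hz]
            for hz in mix_contour if hz in play_contour}
```

By construction the 1kHz deviation is always zero, because each contour is defined relative to 1kHz; all of the error shows up at the other frequencies.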

Neither of the mixes shown above will translate well to different playback levels – even through the monitors they were mixed on – and both will require corrective EQ in mastering or perhaps even a re-mix, meaning more time and more cost. Mixing loud is proud, mixing quiet is polite, but in both cases you’re mixing on credit: it feels good now but you’ll be paying for it later because for everything else there’s mastering card…

It’s also worth noting that over a long day of mixing our hearing mechanisms become tired, or ‘fatigued’, and this causes us to inadvertently turn up the monitoring level so that things continue to sound exciting. Although our hearing mechanisms suffer from fatigue, the Equal Loudness Contours remain the same, so by increasing the monitoring level we are inadvertently shifting our hearing ‘baseline’ up to a higher Equal Loudness Contour – incurring all the problems that come with that. If you were to mix five songs over a 15-hour day in the studio, it would not be surprising to find that during playback the next day (with rested hearing) the first mix made the day before sounds good, but the last mix made the day before is considerably lacking in low and high frequencies. Why? Because the monitoring level was steadily increasing throughout the day that the mixes were made, so the last mix was made on a very different Equal Loudness Contour than the first mix.

For these reasons, professional audio facilities calibrate all of their monitoring systems to a standard SPL, typically somewhere around 80 Phons (e.g. the monitoring volume control is adjusted so that a 1kHz tone at -20dBFS or 0dB VU on the stereo bus creates an SPL of 80dB at the monitoring position), which helps to maintain spectral consistency from mix to mix and within a range of playback levels from about 60 Phons to 100 Phons.
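The calibration described above reduces to two simple calculations. This sketch uses the reference values from the text (1kHz at -20dBFS producing 80dB SPL) and assumes the playback chain is linear, so a change of 1dB on the stereo bus produces a change of 1dB SPL at the monitoring position.

```python
REFERENCE_DBFS = -20.0  # 1kHz tone level on the stereo bus (from the text)
REFERENCE_SPL = 80.0    # target SPL at the monitoring position (from the text)

def expected_spl(level_dbfs: float) -> float:
    """SPL at the monitoring position for a 1kHz tone at level_dbfs, once calibrated."""
    return REFERENCE_SPL + (level_dbfs - REFERENCE_DBFS)

def volume_correction(measured_spl: float) -> float:
    """dB to add to the monitor volume control so the reference tone reads REFERENCE_SPL."""
    return REFERENCE_SPL - measured_spl

print(expected_spl(-14.0))     # a tone 6dB above the reference level
print(volume_correction(74.0)) # reference tone measured 6dB too quiet
```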

The illustration above shows how a mix made at a monitoring level of 80 Phons (blue) translates to higher monitoring levels (100 Phons, green) and lower monitoring levels (60 Phons, red). In both cases, the perceived differences above 500Hz are insignificant. Below 500Hz, when the 80 Phons mix is replayed at 100 Phons there will be a gradual increase in perceived low frequency energy (rising to +10dB at 31.5Hz), and when the 80 Phons mix is replayed at 60 Phons there will be a gradual decrease in perceived low frequency energy (falling to -10dB at 31.5Hz). These changes are not ideal, but they’re acceptable considering they occur over a range of 40dB (from 60 Phons to 100 Phons) and retain very high consistency above 500Hz throughout that range.

80 Phons or thereabouts is also a good level for minimising short-term hearing fatigue and long-term hearing damage, and acts as a warning sign: if the calibrated monitoring volume is not feeling loud enough during a long session, it means either a) the engineer’s hearing is becoming fatigued and it’s time to take a break, or b) the metered level of the mix is lower than the chosen calibration level (typically averaging -20dBFS or 0dB VU) and should be adjusted or compensated for accordingly.

Using a calibrated monitoring level streamlines the entire process from recording to mastering, improves ‘mix confidence’ and translation, and removes the dreaded ‘cold light of day’ disappointment, i.e. the mix that sounded amazing at the end of a long day in the studio sounds underwhelming and disappointing when heard the next morning through fresh ears and at a more civilised (i.e. lower) playback level.

We should always be aware of our monitoring levels, regardless of whether we’re using speakers or headphones. More about that in the final instalment of this series…

As a matter of interest, turning the Equal Loudness Contours upside down, as shown below, allows them to be considered as statistically averaged frequency response graphs of the human ear. This makes it easier to see how the frequency sensitivity of human hearing changes with the SPL.

WHAT DO WE HEAR WITH SPEAKERS?

The illustration below shows the correct configuration for stereo reproduction through speakers, where the acoustic centres of the monitor speakers form two points of an equilateral triangle, and the listener is aligned with the third point. The stereo image is therefore capable of extending across 60° in front of the listener (±30° either side of centre).
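The equilateral triangle can be set out with basic trigonometry. In this sketch the listener sits at the origin facing forward, and each speaker is placed at ±30° at the chosen listening distance; the coordinate convention is an assumption made for illustration. Because the triangle is equilateral, the spacing between the speakers always equals the listening distance.

```python
import math

def stereo_triangle(listening_distance: float) -> dict[str, tuple[float, float]]:
    """Speaker positions (x, y) in metres for an equilateral stereo triangle.

    Listener at the origin facing +y, x positive to the right. Each speaker is
    listening_distance away at 30 degrees either side of centre.
    """
    half_angle = math.radians(30)
    x = listening_distance * math.sin(half_angle)
    y = listening_distance * math.cos(half_angle)
    return {"left": (-x, y), "right": (x, y)}

speakers = stereo_triangle(1.5)
spacing = speakers["right"][0] - speakers["left"][0]
print(speakers, f"spacing = {spacing:.2f} m")
```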

The left speaker provides the IADs and ITDs that are embedded into the mix for the left ear, and the right speaker provides the IADs and ITDs that are embedded into the mix for the right ear. A listener in the proper monitoring position receives these signals in the correct relationships to re-construct the stereo image(s) contained within the recording.

Phantom Images

Sound sources are easily localised anywhere in the space between the speakers in a process known as phantom imaging. In the example shown below, the sound source is perceived as coming from the left of centre but there is no sound source in that location. The localised sound is, therefore, a phantom image. When you can hear a sound source where you cannot see one in a stereo image (e.g. a vocal directly in the centre of a stereo system), you are hearing a phantom image. The ability to create a phantom image is the very core of creating a stereo soundstage; without it we’d just have sounds coming from hard left and hard right.
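One classic way to estimate where a phantom image will appear is the stereophonic ‘law of sines’, which relates the image angle to the left and right channel gains. It is an approximation from the stereo literature, not a method given in this article. The sketch below pairs it with a sine/cosine constant-power pan law; that pan law is an assumption, because DAWs and consoles use a variety of pan laws.

```python
import math

SPEAKER_ANGLE = 30.0  # degrees either side of centre (equilateral triangle)

def constant_power_pan(position: float) -> tuple[float, float]:
    """Left/right gains for a pan position from -1.0 (hard left) to +1.0 (hard right).

    Sine/cosine constant-power pan law (an assumption; real pan laws vary).
    """
    angle = (position + 1.0) * math.pi / 4
    return math.cos(angle), math.sin(angle)

def phantom_image_angle(gain_left: float, gain_right: float,
                        speaker_angle: float = SPEAKER_ANGLE) -> float:
    """Approximate phantom image direction in degrees (positive = left of centre).

    Law of sines: sin(image) / sin(speaker) = (L - R) / (L + R).
    """
    ratio = (gain_left - gain_right) / (gain_left + gain_right)
    return math.degrees(math.asin(ratio * math.sin(math.radians(speaker_angle))))

for pos in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(pos)
    print(f"pan {pos:+.1f}: image at {phantom_image_angle(left, right):+.1f} degrees")
```

Equal gains put the image in the centre and a single channel puts it at the speaker; in between, the predicted image position does not move in direct proportion to the pan position.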

[With the right microphone techniques and/or processing, we can make a sound source appear right in front of our noses, a long way back behind the speakers, or even extend out beyond the sides of the speakers. This type of illusion is also easily created with binaural recordings, but those only work when heard through headphones.]

Interaural Crosstalk & More…

Because there is no acoustic isolation between the two speakers, some of the sound intended for the left ear will reach the right ear, and vice versa. This creates a form of interaural crosstalk.

Every stereo mix will contain IADs due to the use of the pan pot and/or panning effects, and it will also have IADs if it has any stereo tracks that were recorded by a coincident (e.g. XY) or near-coincident (e.g. ORTF) pair of microphones. Likewise, every stereo mix will contain ITDs due to the use of stereo time-based effects processors (reverb, delay, etc.), and it will also have ITDs if it has any stereo tracks that were recorded by near-coincident (e.g. ORTF) and/or widely spaced microphone pairs (e.g. AB, drum overheads).

Interaural crosstalk allows the left and right channel IADs and ITDs to blend in the air, reducing the audibility of the differences between them and thereby reducing the perceived width of the stereo image. It can also cause perceived comb filtering if the mix itself contains any delays of 25ms (0.025s) duration or less between the channels.
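The comb filtering mentioned above follows a simple rule: when a signal is summed with an equal-level copy of itself delayed by τ seconds, cancellations occur wherever the delay equals an odd number of half-periods, i.e. at f = (2k + 1) / (2τ). The sketch below assumes equal levels for simplicity; in real interaural crosstalk the second arrival is attenuated and has its own acoustic path delay, so the notches will be shallower and shifted.

```python
def comb_notches(delay_s: float, max_hz: float = 20000.0) -> list[float]:
    """Notch frequencies (Hz) when a signal is summed with an equal-level copy
    delayed by delay_s seconds: f = (2k + 1) / (2 * delay_s)."""
    notches = []
    k = 0
    while (f := (2 * k + 1) / (2 * delay_s)) <= max_hz:
        notches.append(f)
        k += 1
    return notches

print(comb_notches(0.001, 5000))   # 1ms between channels: notches 1kHz apart
print(len(comb_notches(0.025)))    # 25ms between channels: notches only 40Hz apart
```

Shorter delays produce fewer, more widely spaced notches across the audible band, which makes the tonal colouration easier to hear.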

The Speakers & The Room

Another challenge arises because each speaker remains a distinct sound source and therefore creates its own ITDs and IADs, ultimately telling the ear/brain system that there are really only two sound sources – information that conflicts with the ITDs and IADs embedded into the mix. These side-effects, and the imaging changes caused by interaural crosstalk described above, are inherently compensated for when mixing through speakers because each signal’s level and panning are adjusted as required to sound right.

Listening through speakers also introduces the possibility of first-order reflections from nearby surfaces in the listening space that will be superimposed over the playback and ultimately confuse any spatial information from the recording itself – such problems can greatly interfere with panning decisions. Most listening environments will also have some reverberation of their own, which ultimately affects the levels of reverberation we add to the mix (more about that below). Room reflections and reverberation are addressed with acoustic treatment in a studio control room or in a mixing room, but can be a problem with general listening through speakers outside of the studio environment.

If we introduce enough spatial information (reverberation, etc.) into our mixes the sonic presence of the speakers and the room becomes insignificant – assuming the room is acoustically acceptable to begin with. A good mix transcends the speakers and the room, hopefully invoking a ‘willing suspension of disbelief’ – that feeling when a mix somehow transports you to another place, dimension or world where you cannot see the man behind the curtain.

Visceral Impact

The word ‘viscera’ refers to the soft internal organs of the human body: the lungs, heart, digestive organs, reproductive organs and so on. Therefore, ‘visceral impact’ refers to the impact the sound or mix has on the soft internal organs of our bodies; in other words, how we physically ‘feel’ the sound. Low frequency sounds have the longest wavelengths and generally the highest energy of all sounds in a mix, and therefore provide the most visceral impact.

It is often said that low frequencies stimulate the adrenal glands (located above the kidneys), causing them to generate the hormone ‘adrenaline’ which is responsible for making us want to move and dance when listening to music. However, there is little research to substantiate this. If adrenaline due to visceral impact were a factor required for dancing, then silent discos, silent raves and similar events – which are all based on people dancing to music heard through headphones – would not exist.

WHAT DON’T WE HEAR WITH HEADPHONES?

Headphone listening differs from speaker reproduction much more significantly than most people assume. There are no listening room acoustics to alter the frequency response, there is no interaural crosstalk to mess with the stereo imaging and introduce acoustic comb filtering in the space between the speakers, and there is no visceral impact to add an enhanced/exaggerated sense of excitement.

What we hear when mixing with headphones is the stereo mix directly from our DAW, without any external influences other than the frequency response and distortions of the headphones and the amplifier that is driving them. A good pair of headphones can consistently and reliably deliver frequencies that extend considerably above and below the range of human hearing. It therefore seems logical that a pair of low distortion headphones with a perfectly flat frequency response would provide the ultimate audio reference. Right? The answer is ‘yes’ for listening, but ‘no’ for mixing. Why not?

A mix done on speakers contains compensations for those external influences as they existed in the mixing room (frequency response, room acoustics, interaural crosstalk, visceral impact, etc.), and those compensations ultimately make the mix more resilient – giving it better translation through a wider range of playback systems. Headphone mixing does not have those external influences and therefore our headphone mixes do not compensate or allow for them, resulting in less resilient and more ‘headphone specific’ mixes that do not translate as well to reproduction through speakers and sometimes even through other types of headphones.

ADDING RESILIENCE

What can we do to incorporate those valuable ‘speaker mixing’ compensations into our headphone mixing process and thereby make our headphone mixes more resilient? Let’s start by looking at what headphone manufacturers are doing with frequency responses, then we’ll look at trickier ‘hands on’ mixing problems like making sense of panning in headphones, establishing a reverberation reference when there is no mixing room, and anticipating problems that might be introduced by interaural crosstalk that doesn’t occur when monitoring in headphones.

Frequency Response & Voicing

One of the goals of speaker manufacturers, regardless of whether their products are intended for professional or consumer use, is to create speakers with a relatively flat frequency response from 20Hz to 20kHz. Most studio monitors include their frequency response graph in the documentation that comes with them; it’s rarely a perfectly flat line but if the deviations are gradual and remain within about ±2dB throughout the intended bandwidth the monitors are considered to be acceptable and we can learn to work with them. The illustration below shows the theoretical flat response (from 20Hz to 20kHz) that most speaker manufacturers aspire to (dark red), and the ±2dB window of deviation that is generally considered acceptable (light red).
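The ±2dB acceptance window can be expressed as a simple check on a measured response. Two assumptions here are not from the text: the ‘flat’ reference line is taken as the average level across 20Hz to 20kHz, and the measurement values in the usage line are hypothetical. The check also ignores whether deviations are gradual, which the text notes is part of what makes a monitor acceptable.

```python
def within_tolerance(response_db: dict[float, float], tolerance_db: float = 2.0,
                     low_hz: float = 20.0, high_hz: float = 20000.0) -> bool:
    """True if every measured point between low_hz and high_hz stays within
    ±tolerance_db of the average level over that band (used as the 'flat' line)."""
    in_band = [db for hz, db in response_db.items() if low_hz <= hz <= high_hz]
    if not in_band:
        raise ValueError("no measurement points inside the band")
    reference = sum(in_band) / len(in_band)
    return all(abs(db - reference) <= tolerance_db for db in in_band)

# Hypothetical measurement points (Hz: dB), for illustration only.
hypothetical = {20: -1.8, 100: 0.5, 1000: 0.0, 5000: 1.0, 20000: -1.5}
print(within_tolerance(hypothetical))
```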


The dominance of speaker listening prior to the ascent of headphone listening means that our impression of what a flat frequency response sounds like has been skewed by the contribution of the listening room acoustics – to the point that a pair of headphones with a flat frequency response will sound excessively bright while also lacking in low frequency energy. Unlike the sound from speakers, the sound from headphones does not undergo the high frequency attenuation caused by absorption in the air and by soft furnishings in the room (hence the excessive brightness), and it does not get the low frequency enhancement from the listening room’s resonant modes (hence the lack of low frequencies).

Due to these differences, contemporary headphones are not designed to have a flat frequency response. Rather, they’re ‘voiced’ (i.e. their frequency response has been moved away from the theoretical ideal of ‘flat’) so that they sound like speakers with a flat frequency response. Hence we see headphone marketeers using descriptive phrases like ‘neutral tonality’ and ‘voiced to sound natural’, rather than showing frequency response graphs – because such graphs would alarm anybody who expected to see a perfectly straight line.

Here’s the concept: start with a speaker with a flat frequency response, place a measurement microphone in front of it at the distance a typical listener would be, run a frequency sweep through the speaker, and capture it with the microphone. The result is the ‘flat’ frequency response as it is reproduced by the speaker and captured at the listening position. Build that frequency response into the headphones and they should sound like speakers with a flat frequency response.
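The final step of the concept, building the captured response into the headphones, amounts to a per-frequency difference between the target and the headphone’s own response. This is a minimal sketch, assuming both responses have been measured at the same frequencies and in a comparable way (for example, the headphone on a measurement rig); the function name and the test values are illustrative only.

```python
def voicing_eq(target_db: dict[float, float],
               headphone_db: dict[float, float]) -> dict[float, float]:
    """Per-frequency EQ (dB) that would move a headphone's measured response onto a target.

    target_db: the speaker response captured at the listening position.
    headphone_db: the headphone's own measured response at the same frequencies.
    Positive values are boosts, negative values are cuts.
    """
    return {hz: target_db[hz] - headphone_db[hz]
            for hz in target_db if hz in headphone_db}
```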

This seems simple enough, but it raises questions about the kind of room that should be used for such measurements because the room acoustics influence the sound captured by the microphone.

Throughout the 1970s it was standard practice to use an anechoic chamber, thereby creating a ‘free-field’ environment where the only sound to reach the microphone was the direct sound from the speaker with no contribution from the room itself (i.e. no resonances, no reflections and no reverberation) other than the loss of high frequencies over distance through the air. This was known as ‘free-field equalisation’ and, not surprisingly, headphones that use ‘free-field equalisation’ sound rather like listening to a speaker with a flat frequency response placed in an anechoic chamber. It was an improvement over the sound of headphones with the theoretically perfect flat response, but it still did not correlate well with speaker listening because nobody listens to speakers in an anechoic environment. The headphone designers had the right idea, but there was more work to be done…

In an attempt to create something that correlated better with speakers, the 1980s saw the introduction of ‘diffuse-field equalisation’ – a method that is still popular. A ‘point-source’ loudspeaker (i.e. a speaker that radiates frequencies equally well in all directions), with a flat frequency response, is placed in a reverberation chamber rather than an anechoic chamber. A frequency sweep is reproduced by the speaker and captured by a dummy head placed at a sufficient distance to ensure it is in the diffuse field (i.e. where the room’s reverberation is the dominant sound). This measurement provides the frequency response the headphones are voiced to reproduce. Many critically-acclaimed and widely-adopted headphones conform to the diffuse-field equalisation curve.
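How far is ‘a sufficient distance’? A common way to estimate it is the critical distance (reverberation radius), where the direct and reverberant sound levels are equal; this Sabine-based approximation is not part of the original text. The dummy head needs to sit beyond, and preferably well beyond, this distance. Q = 1 corresponds to the omnidirectional ‘point-source’ speaker described above, and the chamber dimensions in the usage line are hypothetical.

```python
import math

def critical_distance(volume_m3: float, rt60_s: float, directivity_q: float = 1.0) -> float:
    """Approximate distance (m) at which direct and reverberant levels are equal.

    Sabine-based approximation: r_c = 0.057 * sqrt(Q * V / RT60),
    with V in cubic metres and RT60 in seconds.
    """
    return 0.057 * math.sqrt(directivity_q * volume_m3 / rt60_s)

# Hypothetical reverberation chamber: 200 cubic metres with an RT60 of 5 seconds.
print(f"{critical_distance(200.0, 5.0):.2f} m")
```

In a highly reverberant chamber the critical distance is short, which makes it practical to place the dummy head in the diffuse field.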

More recently, tests by Dr Sean Olive and others working for Harman International (parent company of AKG, Crown, dbx, JBL, Lexicon, Soundcraft, Studer et al) replaced the free-field environment and the diffuse-field environment with what was generally considered to be a good-sounding listening room. The results were then combined with the results of tests in which numerous listeners were asked to audition and rate their preferences for numerous headphones with different frequency responses. These tests and measurements resulted in the Harman target curve (aka the ‘Harman Curve’), as shown below:

The Harman Curve is hardly ‘flat’, but it significantly closes the tonality gap between headphone and speaker reproduction, and provides very good tonal translation between them. Neumann seem to have taken this approach one step further by using their KH series monitors as the reference speakers for voicing their NDH headphones, resulting in headphones that correlate remarkably well with their monitors and minimise the ‘line-of-best-fit’ compromising that occurs when an instrument in the mix is too loud on one set of monitors but too soft on another set of monitors. This level of correlation between monitor speakers and headphones is something that very few other manufacturers can offer because most headphone manufacturers don’t make studio monitors, and most studio monitor manufacturers don’t make headphones.

Mixing on headphones that are voiced this way (i.e. to sound like speakers with a flat response in a good room) will usually result in better translation to speaker playback in terms of tonality, and solves one of the major differences between speaker mixes and headphone mixes. However, it does not resolve spatial disparities such as panning and reverberation levels, and it doesn’t counter the effects of interaural crosstalk. Solving and/or compensating for those problems requires a more strategic approach…

Panning Compensation

As discussed earlier, speaker listening creates a stereo image that can be up to 60° wide (±30°, with 0° being directly in front of the listener). A sound panned hard left should appear at 30° to the left of centre (i.e. coming directly from the left studio monitor), and a sound panned hard right should appear at 30° to the right of centre (i.e. coming directly from the right studio monitor).

In comparison, headphone listening creates a stereo image that can be up to 180° wide (±90°), depending marginally on the placement of the drivers within the ear cups. A sound that is panned hard left will be 90° to the left of the centre (coming directly from the left ear cup) and a sound that is panned hard right will be 90° to the right of centre (coming directly from the right ear cup).

The difference between the widths of their stereo soundstages can be represented as a ratio of 180:60, or 3:1, meaning the soundstage width of a headphone mix needs to be approximately 3x wider than it is expected to be when heard through speakers. This is an important consideration when mixing on headphones, because a sound that is panned to 45° to the left of centre when heard in headphones will be heard at 45°/3 = 15° to the left when heard through speakers.

Although headphone monitoring exaggerates panning when compared to speaker monitoring, both monitoring systems downplay panning positions when compared to the visual placement indicated by the pan pot – which rotates through a range of 270° (±135°). The panning ratios between the pan pot, headphones and studio monitors are therefore 270:180:60, or 4.5:3:1. From the point of view of a mixing engineer sitting in the stereo sweet spot, a hard left pan will be seen at 135° to the left on the pan pot, but will be heard at 90° to the left on headphones and heard at 30° to the left through studio monitors. (To add to the confusion, it will be shown at 45° to the left on a goniometer, but more about that later…)
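The 270:180:60 ratios can be used as a quick conversion between the pan pot, headphones and speakers. This sketch applies the same linear rescaling the text uses. That is a simplification, because real pan laws and phantom imaging do not map angles in exact proportion.

```python
# Full widths of each soundstage in degrees, as given in the text (±135°, ±90°, ±30°).
PAN_POT_SPAN = 270.0
HEADPHONE_SPAN = 180.0
SPEAKER_SPAN = 60.0

def convert_angle(angle_deg: float, from_span: float, to_span: float) -> float:
    """Linearly rescale an off-centre angle from one soundstage width to another."""
    return angle_deg * to_span / from_span

print(convert_angle(45, HEADPHONE_SPAN, SPEAKER_SPAN))   # heard in headphones -> speakers
print(convert_angle(15, SPEAKER_SPAN, HEADPHONE_SPAN))   # speaker target -> headphone position
print(convert_angle(135, PAN_POT_SPAN, HEADPHONE_SPAN))  # hard left on the pot -> headphones
```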

For any given headphones, it is always good procedure to start a mix by establishing the location of five reference points across the stereo soundstage – hard left, mid-left, centre, mid-right, hard right – and reminding ourselves of where those locations appear on the pan pot. This is especially important if we’re new to headphone mixing, because we will intuitively pan sounds by ear to the same locations we’re used to hearing them when mixing through speakers. That results in a very narrow soundstage when the mix is heard through speakers, because speaker playback reduces the width of a headphone mix by a factor of 3:1, as mentioned earlier.

Remember, the stereo soundstage on headphones is approximately 3x wider than it is on speakers. So, if you want a sound to appear 15° to the left (i.e. mid-left) when heard through speakers, you have to pan it 45° to the left (i.e. 3 x 15°) if mixing on headphones.

One interesting aspect of headphone mixing related to panning is the location of ‘centre’. Depending on the headphones and the listener, ‘centre’ often appears to be inside the listener’s head or directly above it. To overcome this problem, many contemporary headphone designs use angled drivers and/or angled ear pads to place the drivers slightly forward of the ear canal. This allows the pinnae to create subtle ISDs that place the soundstage in front of the listener, at the possible expense of a minor reduction in the width of the stereo soundstage.

Reverberation Compensation

Every well-designed mixing room conforms to a reverberation curve that ensures a level of background reverberation representing an idealised real-world listening environment. Among other things, this creates a ‘reverberation reference’ to balance the levels and times of our reverberation effects against, ensuring they are not significantly lower, higher, longer or shorter than intended when the mix is taken out of the room and played in the real world.

It is commonly believed that if we mix in a room that does not have enough reverberation of its own, we will add too much reverberation to our mixes to compensate. The same thinking implies that if we mix in a room that has too much reverberation of its own we won’t add enough reverberation to our mixes. Although this appears to make sense, it ignores the ear/brain’s remarkable ability to distinguish between the reverberation of the mixing room and the reverberation added to the mix. The mixing room’s reverberation is not necessarily heard as part of the mix’s reverberation, but it does provide a masking effect that the reverberation in our mixes needs to overcome. The result is as follows…

If we mix through speakers in a room that has a particularly low reverberation reference, the levels of the reverberation effects we add to the mix might not be high enough because they are easily heard over the room’s reverberation reference. Likewise, the reverberation times we choose might be too short because the room’s low reverberation reference makes it easier to hear the added reverberation tails for longer.

Similarly, if we mix through speakers in a room that has a particularly high reverberation reference, the levels of the reverberation effects we add to our mix might be too high in order to be heard over the room’s high reverberation reference. Likewise, the reverberation times we choose might be too long because the room’s high reverberation reference makes it harder to hear the added reverberation tails for the desired time.

The illustration below shows this problem. The upper graph shows reverberation (green) being added to a mix in three different mixing rooms: one with a very low reverberation reference, one with a good reverberation reference, and one with a very high reverberation reference. Each room's reverberation reference level is shown in grey, and in each case the mix reverberation (green) has been added at an appropriate level and duration to achieve the same perceived level and duration in each room, represented by the green area above the grey areas.

The lower graph shows what happens when each mix is replayed in a room with a good reverberation reference. The first mix’s reverberation is inaudible, resulting in a very ‘dry’ mix. The second mix is consistent with the upper graph. The third mix’s reverberation is too high, resulting in a very ‘wet’ mix.
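The masking argument behind the illustration can be sketched as a toy model. The assumptions are ours, not the article's: the room's reverberation reference acts as a fixed masking floor in dB, the added reverb tail decays in a straight line of 60 dB per RT60, and the three room levels are invented numbers chosen only to reproduce the dry/consistent/wet pattern.

```python
from dataclasses import dataclass


@dataclass
class Room:
    name: str
    reference_db: float  # hypothetical background reverberation level (dB)


def audible_margin_db(reverb_start_db: float, room: Room) -> float:
    """How far the added reverb starts above the room's reverberation reference."""
    return reverb_start_db - room.reference_db


def audible_tail_seconds(reverb_start_db: float, rt60: float, room: Room) -> float:
    """Time until a tail decaying 60 dB per rt60 seconds sinks below the reference."""
    margin = audible_margin_db(reverb_start_db, room)
    return max(0.0, margin * rt60 / 60.0)


def simulate(target_margin_db: float, rt60: float) -> list[tuple[str, float, float]]:
    """Mix in each room to the same perceived margin, then replay in the 'good' room.
    Returns (mix room name, margin on replay in dB, audible tail on replay in s)."""
    rooms = [Room("low", -60.0), Room("good", -45.0), Room("high", -30.0)]
    playback = rooms[1]
    results = []
    for mix_room in rooms:
        # The engineer sets the reverb so it sits target_margin_db above *this* room.
        start_db = mix_room.reference_db + target_margin_db
        results.append((
            mix_room.name,
            audible_margin_db(start_db, playback),
            audible_tail_seconds(start_db, rt60, playback),
        ))
    return results
```

With `simulate(12.0, 2.0)`, the mix made in the low-reference room arrives 3 dB below the good room's reference (inaudible, so 'dry'), the good-room mix keeps its 12 dB margin, and the high-reference mix arrives 27 dB above it ('wet'). In this model, headphones behave like a room whose reference is lower still, which is consistent with headphone-only reverberation decisions tending to come out too low on speakers.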

Headphone listening is not affected by the mixing room's acoustics and therefore has no reverberation reference, making it harder to judge and set reverberation levels and times in a way that translates well to speakers. A mix made entirely through speakers in an acoustically designed mixing room translates to headphones with no surprises in reverberation levels or times because the reverberation effects have been balanced against the room's reverberation reference. However, a mix made entirely through headphones could have surprising changes in reverberation levels when heard through speakers because it has been made with no reverberation reference. What sounds 'just right' when mixed in headphones is often too low when heard through speakers.

We can solve the reverberation reference problem when mixing with headphones by using a reference track as a reality check, which we’ll talk more about in the next instalment.

Interaural Crosstalk Compensation

When mixing with headphones we cannot predict what changes will happen to our mix when the left and right signals combine in the air and at the ears. As mentioned earlier, this is known as 'interaural crosstalk' and is an unavoidable part of speaker monitoring: some of the left channel's signal will enter the right ear, and some of the right channel's signal will enter the left ear.

Interaural crosstalk can affect the perceived levels and panning of individual instruments in our stereo mix, it can introduce comb filtering, and it can alter the perceived level of reverberation and similar stereo time-based effects.
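Comb filtering appears whenever a sound is summed with a delayed copy of itself. The sketch below assumes the two copies are at equal level, which is the worst case (as in a mono fold-down); at the ears, crosstalk arrives partly shadowed by the head, so real notches are shallower. The 0.25 ms delay used in the usage line is an assumed illustrative value, not a figure from this article.

```python
import math


def comb_notches_hz(delay_s: float, max_hz: float = 20_000.0) -> list[float]:
    """Notch frequencies when a signal is summed with an equal-level copy
    delayed by delay_s: the odd multiples of 1 / (2 * delay_s)."""
    if delay_s <= 0:
        raise ValueError("delay must be positive")
    first = 1.0 / (2.0 * delay_s)
    notches = []
    k = 0
    while (f := (2 * k + 1) * first) <= max_hz:
        notches.append(f)
        k += 1
    return notches


def comb_gain_db(freq_hz: float, delay_s: float) -> float:
    """Level of the equal-level sum relative to one copy alone:
    |1 + e^(-j*2*pi*f*delay)| = 2 * |cos(pi * f * delay)|."""
    magnitude = 2.0 * abs(math.cos(math.pi * freq_hz * delay_s))
    return 20.0 * math.log10(magnitude) if magnitude > 0 else float("-inf")
```

For example, `comb_notches_hz(0.00025)` gives notches at roughly 2, 6, 10, 14 and 18 kHz, and `comb_gain_db(0.0, 0.00025)` shows the +6 dB peak at low frequencies, where the two copies add in phase.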

The easiest way to check for the effects of interaural crosstalk when mixing with headphones is to check the mix in mono. This creates a ‘worst case’ crosstalk scenario (i.e. both channels are completely added together) that will exaggerate any level changes or comb filtering issues that might occur when the mix is heard through speakers. Subtle changes in individual signal levels within the balance are to be expected, but can also be indicators of hidden weaknesses in the mix that are worth addressing and fine-tuning. For example, sounds in the stereo mix that become too loud or too soft when monitored in mono are probably not at the right level in the stereo mix, and should be adjusted accordingly. A headphone mix that sounds acceptable when monitored in stereo and acceptable when monitored in mono stands a good chance of sounding acceptable when heard through speakers, too.
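One common source of level shifts in mono is the pan law itself. The sketch below assumes a sine/cosine constant-power pan law (-3 dB at centre); many DAWs let you choose a different law, which changes the numbers. It shows how a single source's mono fold-down level varies with pan position, relative to the same source panned hard to one side. The function names are hypothetical.

```python
import math


def constant_power_pan(position: float) -> tuple[float, float]:
    """Left/right gains for position -1.0 (hard left) .. +1.0 (hard right),
    using a sine/cosine constant-power law (-3 dB per channel at centre)."""
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be within -1.0 .. +1.0")
    theta = (position + 1.0) * math.pi / 4.0  # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


def mono_change_db(position: float) -> float:
    """Level of the mono fold-down (L + R) for one panned source,
    relative to the same source panned hard to one side."""
    left, right = constant_power_pan(position)
    return 20.0 * math.log10(left + right)


if __name__ == "__main__":
    for pos in (-1.0, -0.5, 0.0, 0.5, 1.0):
        print(f"pan {pos:+.1f}: mono change {mono_change_db(pos):+.2f} dB")
```

`mono_change_db(0.0)` is about +3.0 dB while `mono_change_db(-1.0)` is 0 dB, so with this law a centre-panned sound gains roughly 3 dB relative to hard-panned sounds when the mix is folded to mono.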

Another useful tool for revealing potential interaural crosstalk problems in a headphone mix is the goniometer (also known as a phase scope or vectorscope, and often paired with a correlation meter), a popular prop in old science fiction movies. More about that useful tool in the next instalment…
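Both of these displays reduce to simple arithmetic on the left and right samples. This sketch shows a zero-lag correlation reading (a common formulation; real meters usually compute it over a short sliding window, which is omitted here) and the 45° rotation a goniometer applies before plotting. The function names and the exact axis orientation are assumptions.

```python
import math


def correlation(left: list[float], right: list[float]) -> float:
    """Zero-lag correlation of L and R: +1 = identical (mono),
    0 = uncorrelated, -1 = one channel polarity-inverted against the other."""
    num = sum(l * r for l, r in zip(left, right))
    den = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return num / den if den > 0 else 0.0  # silence in either channel reads 0


def goniometer_point(l: float, r: float) -> tuple[float, float]:
    """Rotate an (L, R) sample pair by 45° for display: x = side, y = mid.
    A left-only signal traces a line leaning 45° to the left of vertical."""
    x = (r - l) / math.sqrt(2.0)
    y = (l + r) / math.sqrt(2.0)
    return x, y
```

A signal fed identically to both channels reads +1, a polarity-inverted copy reads -1, and `goniometer_point(1.0, 0.0)` lands on a line 45° left of vertical, which is the hard-left trace mentioned earlier.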
